The Death of Assessment: How LLMs Broke the Proof-of-Work System

January 15, 2025

A few weeks ago, a colleague at Atlassian mentioned something that stopped me cold. A new hire, fresh from their Master's program, had been casually bragging that AI had generated all the experimental data for their thesis. They hadn't run a single experiment. They hadn't written a single word themselves.

This isn't an isolated incident. It's a symptom of a fundamental shift that's broken the educational system's core assumption: that producing an artifact requires understanding the underlying concepts.

The Old Contract

For decades, universities operated on a simple premise: effort in, competence out. Students would struggle through assignments, research papers, and projects. The process was messy, time-consuming, and often frustrating. But that struggle was the point.

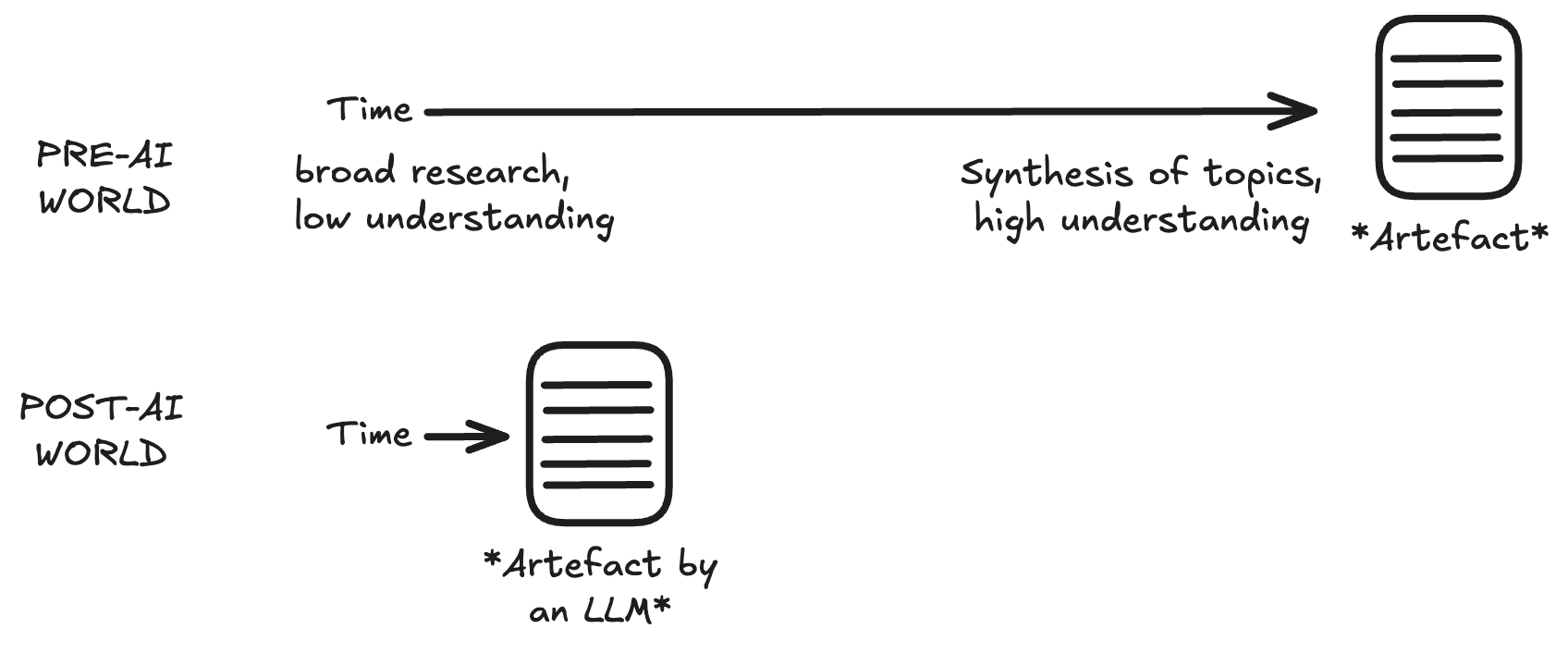

The artifact—the final paper, the completed assignment, the working code—served as proof that the student had wrestled with the material. It was a form of intellectual proof-of-work.

The fundamental shift: from learning through struggle to instant artifact generation

Paired with examinations, assignments formed a dual system that worked. Students could cheat on assignments, but the exam would expose their lack of understanding. They could memorize for exams, but assignments would test their ability to apply knowledge.

The New Reality

LLMs have fundamentally altered this equation. Students can now produce sophisticated artifacts—research papers, code, data analyses—without engaging with the underlying concepts. The proof-of-work is gone.

I witnessed this firsthand when helping a friend with a CSV analysis assignment. When I showed him how ChatGPT could parse his requirements, process the data, and generate visualizations in minutes, he was stunned: "Why didn't anyone tell me this was possible?"

The question haunted me. Why hadn't anyone told him? Why are universities still operating as if it's 2019?

The Missing Feedback Loop

The issue isn't that students are using AI—it's that universities have no visibility into how they're using it. When a student submits a perfectly formatted research paper, professors can't distinguish between:

- Constructive use: AI as a research assistant, helping with organization and clarity while the student drives the analysis

- Destructive use: AI as a ghostwriter, generating entire sections while the student remains passive

This blindness has created a crisis of confidence. Teaching assistants now expect most students to use AI for entire assignments, and they resort to one-on-one interviews to verify understanding. Professors are abandoning certain assignment types altogether, unsure how to maintain academic standards.

The result? We're losing half of our assessment framework. Assignments no longer serve as proof-of-work, but we're still pretending they do.

The Industry Disconnect

The consequences extend far beyond academia. Hiring managers report a growing disconnect between what graduates claim to know and what they can actually do. The carefully crafted portfolio that looked impressive on paper crumbles under basic technical questions.

One startup founder told me they've started giving candidates extremely basic coding tasks during interviews—not because they're testing for advanced skills, but because they need to verify that candidates can write code at all. The artifacts in their portfolios tell them nothing about actual competence.

The Path Forward: Visible AI

The solution isn't to ban AI—that ship has sailed. Students will use AI whether universities acknowledge it or not. The solution is to bring AI use into the light.

This is why we're building Kurnell. Instead of fighting AI, we're integrating it into the educational ecosystem in a way that preserves the proof-of-work principle:

- For students: AI that understands course materials and encourages iterative learning rather than one-shot solutions

- For universities: Analytics that reveal how students interact with AI—whether they're using it as a learning tool or a work-avoidance mechanism

The goal isn't to catch cheaters. It's to restore the feedback loop that makes assessment meaningful.
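To make the idea of usage analytics concrete, here is a minimal sketch of how a one-shot request might be told apart from iterative use. It is an illustration only, not Kurnell's implementation: the `Session` schema, the three signals, the weights, and the threshold are all assumptions invented for this example, and a real system would need far richer signals.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """Hypothetical log of one student's AI use on one assignment."""
    prompts: list[str]   # student messages, in order
    student_edits: int   # edits the student later made to AI output


def iteration_score(session: Session) -> float:
    """Crude 0..1 score: higher means more back-and-forth and more own work."""
    if not session.prompts:
        return 0.0
    follow_ups = len(session.prompts) - 1
    questions = sum(1 for p in session.prompts if p.rstrip().endswith("?"))
    # Each signal is capped at 1.0; the weights below are arbitrary assumptions.
    turn_part = min(follow_ups, 5) / 5
    question_part = questions / len(session.prompts)
    edit_part = min(session.student_edits, 10) / 10
    return round(0.4 * turn_part + 0.3 * question_part + 0.3 * edit_part, 2)


def label(session: Session, threshold: float = 0.4) -> str:
    """Map the score to a coarse label; the threshold is an assumption."""
    return "iterative" if iteration_score(session) >= threshold else "one-shot"


one_shot = Session(
    prompts=["Write my 2000-word essay on the French Revolution."],
    student_edits=0,
)
iterative = Session(
    prompts=[
        "Why does my regression have a low R^2?",
        "What does heteroscedasticity mean?",
        "Is my revised interpretation right?",
    ],
    student_edits=6,
)

print(label(one_shot), label(iterative))
```

A heuristic like this is easy to game and says nothing about understanding on its own. Its only purpose here is to show the kind of signal that becomes available once AI use happens somewhere the institution can see it.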

The Stakes

This isn't just about academic integrity—it's about the fundamental value of education. If universities can't distinguish between students who understand material and those who don't, what's the point of degrees?

The AI revolution is here. Universities can either adapt their assessment methods to work with this new reality, or watch their credibility erode as graduates enter the workforce unable to do what their degrees say they can.

Kurnell is building the AI platform that helps universities navigate this transition. If you're interested in how we can help your institution adapt to the AI era, get in touch.

What's Next

The conversation about AI in education is just beginning. We're working with universities to pilot new assessment methods that work with AI rather than against it. The goal is to preserve what makes education valuable while embracing the tools that will define the future.

The proof-of-work system is broken. But that doesn't mean we can't build something better.